Test Data Management (TDM) enables secure, realistic testing with de-identified data; DataStealth automates and streamlines this process end-to-end.

In today's data-driven software development landscape, the quality and realism of test data are key for product development.

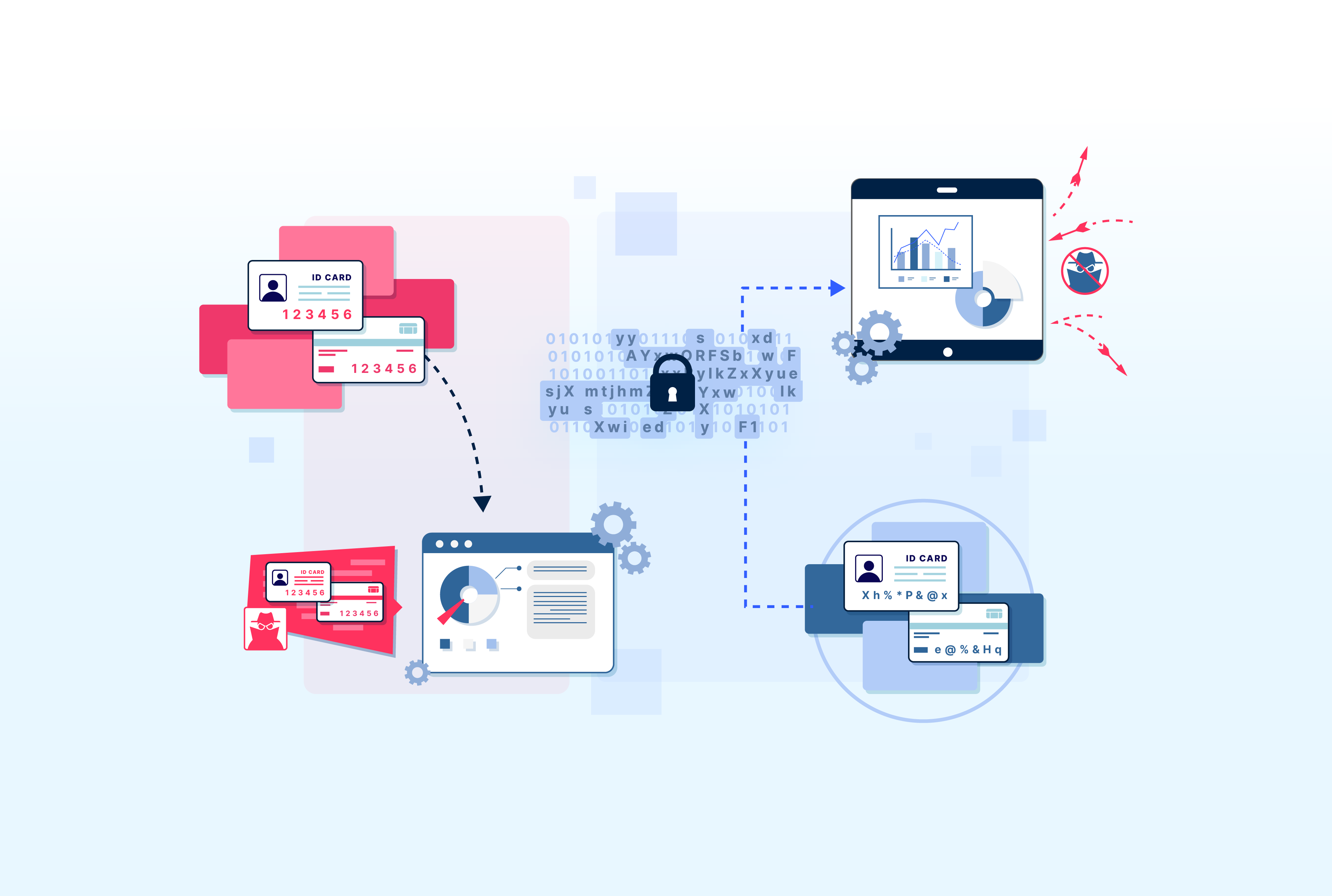

However, using production data for development and testing introduces significant security and compliance risks. This is where test data management plays a key role: it lets your development and QA teams work with high-fidelity data that mirrors production, without exposing sensitive information.

Test data management (TDM) is the process of planning, designing, creating, controlling, and managing data for use in software testing and development environments.

The core objective of TDM is to provide testing and development teams with high-quality, realistic, and secure data that accurately reflects production environments without exposing sensitive information.

In practice, this involves generating high-fidelity, anonymized test data specifically for these non-production settings.

By replacing sensitive production data with meaningful yet non-sensitive substitute values, TDM ensures that test data retains the structure and usability of the original dataset. Development and QA teams can then conduct thorough, production-like testing without risking data breaches or non-compliance.

Effective TDM strategies aim to eliminate the use of actual sensitive data in test environments, which is a critical step in reducing risk and bolstering overall data security posture.

TDM is an effective solution to address data sprawl. It minimizes the risk of data breaches by ensuring that sensitive production data isn't exposed in less secure testing environments. TDM also prevents accidental internal PII exposure, for example, by developers in bug reports, by providing them with de-identified data.

TDM is also crucial for upholding regulatory compliance with mandates like GDPR and HIPAA, allowing organizations to demonstrate their due diligence in protecting sensitive data throughout the software development lifecycle.

TDM significantly enhances testing quality and speed. By providing high-fidelity, de-identified data that accurately reflects production scenarios, it empowers testing and development teams to create realistic test cases, perform more thorough bug detection and support more robust software releases.

Generation of this realistic test data can also be integrated into CI/CD pipelines, thus accelerating automated testing cycles.

TDM can draw on several types of test data, usually chosen based on testing needs, risk tolerance, and compliance requirements.

Production data is a direct copy of live operational data. While it offers maximum realism for testing, it also carries significant risk, as it often contains sensitive information (e.g., PII, PHI, or financial data). Using production data increases the likelihood of data breaches and non-compliance if it is not properly de-identified.

Data subsets are smaller, targeted portions of production data, typically aimed at improving testing efficiency by reducing data volume. However, because they mirror only a portion of the production environment, data subsets limit the ability to conduct full-scale testing. Additionally, if not de-identified, raw subsets carry the same security and compliance risks as full production data.
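As a rough illustration, a subset that keeps parent and child rows together might be built like this. The table shapes (`customers`, `orders`), the `customer_id` key, and the `subset` function are hypothetical names chosen for the sketch, and the output is still raw data that would need de-identification before use.

```python
import random


def subset(customers, orders, fraction, seed=0):
    """Keep a random fraction of customers plus only the orders that belong to them.

    `customers` and `orders` are lists of dicts sharing a `customer_id` key
    (an assumed schema for this sketch).
    """
    if not customers:
        return [], []
    rng = random.Random(seed)  # seeded so the same subset can be rebuilt
    k = max(1, round(len(customers) * fraction))
    kept_customers = rng.sample(customers, k)
    kept_ids = {c["customer_id"] for c in kept_customers}
    # Drop child rows whose parent was not selected, so no order is orphaned.
    kept_orders = [o for o in orders if o["customer_id"] in kept_ids]
    return kept_customers, kept_orders


if __name__ == "__main__":
    customers = [{"customer_id": i, "name": f"Customer {i}"} for i in range(10)]
    orders = [{"order_id": n, "customer_id": n % 10} for n in range(30)]
    small_customers, small_orders = subset(customers, orders, fraction=0.3)
    print(len(small_customers), len(small_orders))
```

Sampling parents first and filtering children second is one simple way to avoid orphaned rows; real schemas with many related tables would need the same rule applied along every foreign key.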

Masked data obscures sensitive fields in production data while the underlying source data remains unchanged.

Techniques like anonymization replace the sensitive values while preserving usability. Dynamic data masking (DDM) applies these changes in real time based on user access rights. The masked view cannot be reversed by the user who receives it, whereas tokenization is reversible for authorized systems.
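A minimal sketch of the dynamic masking idea, assuming a hypothetical `Customer` record and made-up role names: the stored record is left untouched, and each caller receives a view that depends on its role.

```python
from dataclasses import dataclass

# Hypothetical role names for this sketch; a real system would take these
# from its own access-control model.
PRIVILEGED_ROLES = {"dba", "compliance"}


@dataclass(frozen=True)
class Customer:
    name: str
    email: str
    ssn: str


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * max(len(local) - 1, 0)}@{domain}"


def mask_ssn(ssn: str) -> str:
    """Show only the last four characters."""
    return "***-**-" + ssn[-4:]


def view_for(record: Customer, role: str) -> Customer:
    """Return the record as the given role is allowed to see it.

    The source record is never modified; only the returned view differs.
    """
    if role in PRIVILEGED_ROLES:
        return record
    return Customer(
        name=record.name,
        email=mask_email(record.email),
        ssn=mask_ssn(record.ssn),
    )


if __name__ == "__main__":
    stored = Customer("Bob Smith", "bob.smith@example.com", "123-45-6789")
    print(view_for(stored, "developer"))
    print(view_for(stored, "dba"))
```

The masked view carries no information from which the hidden characters could be recovered, which is the sense in which it is non-reversible for the recipient.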

Synthetic data is artificially generated to emulate the core statistical properties and structure of production data without containing any actual sensitive information, making it inherently secure. High-fidelity synthetic data, often created using tokenization engines "on-the-fly", aims to maintain critical aspects like referential integrity for realistic testing.
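One simple way to picture synthetic generation is to fit a small statistical profile on source rows and then sample new rows from it. The sketch below does this with plain sampling rather than a tokenization engine; the column names (`plan`, `monthly_spend`) and the `SYN-` identifier format are assumptions made for illustration.

```python
import random
import statistics
from collections import Counter


def fit_profile(rows):
    """Summarize the source rows: category frequencies and numeric mean/stdev."""
    plans = Counter(r["plan"] for r in rows)
    spend = [r["monthly_spend"] for r in rows]
    return {
        "plans": list(plans.keys()),
        "plan_weights": list(plans.values()),
        "spend_mean": statistics.mean(spend),
        "spend_stdev": statistics.stdev(spend),  # needs at least two rows
    }


def generate(profile, n, seed=0):
    """Sample n synthetic rows from the profile; no source row is copied."""
    rng = random.Random(seed)
    rows = []
    for i in range(n):
        plan = rng.choices(profile["plans"], weights=profile["plan_weights"], k=1)[0]
        spend = max(0.0, rng.gauss(profile["spend_mean"], profile["spend_stdev"]))
        rows.append(
            {"customer_id": f"SYN-{i:06d}", "plan": plan, "monthly_spend": round(spend, 2)}
        )
    return rows


if __name__ == "__main__":
    source = [
        {"plan": "basic", "monthly_spend": 10.0},
        {"plan": "basic", "monthly_spend": 12.5},
        {"plan": "pro", "monthly_spend": 49.0},
        {"plan": "pro", "monthly_spend": 55.0},
    ]
    for row in generate(fit_profile(source), n=3):
        print(row)
```

This sketch treats each column independently, so correlations between columns are lost; preserving those relationships is what separates high-fidelity synthetic data from simple sampling.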

While Test Data Management offers significant benefits, organizations often encounter several challenges in implementing and maintaining effective TDM practices.

One of the most common hurdles is the slow and often manual process of providing test data to development and QA teams.

Waiting for suitable test data can create bottlenecks in the development lifecycle, delaying testing activities and, consequently, software releases.

Traditional methods of copying and sanitizing production data can be time-consuming and require significant effort from database administrators or specialized data teams.

For testing to be effective, the data used must accurately represent the variety, volume, and complexity of production data.

If test data is of poor quality, incomplete, or doesn't cover realistic scenarios, it can lead to inadequate testing.

This might result in bugs being missed, performance issues going undetected, and ultimately, a lower quality product being released.

Without the right platform, creating high-fidelity synthetic data or properly masked production data that retains business logic and referential integrity can be a complex undertaking.

Managing test data involves ongoing operational tasks, including data generation, masking, subsetting, refreshing data environments, and ensuring compliance.

These tasks can create significant operational overhead, especially in large organizations with multiple applications and frequent releases.

Storing and maintaining numerous copies of test environments also consumes valuable storage resources and can increase infrastructure costs.

A significant security challenge is the proliferation of "shadow data" repositories. This occurs when copies of production data, or subsets, are made for testing or development purposes and stored in uncontrolled or unknown environments.

These shadow copies might not be subject to the same security controls as production data, making them high-value yet vulnerable targets.

The ease of duplicating data in cloud environments can worsen this issue, leading to data sprawl and increased risk of sensitive data exposure if not managed through robust TDM.

Addressing TDM challenges requires a strategic approach focused on automation, robust security measures, and maintaining data quality.

Implement automated workflows for generating and delivering test data.

Integrating TDM tools with CI/CD pipelines allows for on-demand data creation and refresh, significantly reducing delays and manual effort. This ensures that development and QA teams have timely access to the data they need.

Use methods like vaulted data tokenization and/or dynamic data masking. Dynamic data masking obscures sensitive fields and is non-reversible. Vaulted data tokenization goes a step further: it substitutes the sensitive data with non-sensitive, format-preserving tokens that have no mathematical link to the original data, and it is reversible for authorized systems through the vault.

Vaulted data tokenization offers strong protection, especially in preventing data spillage from production into test environments. Because the tokens are not derived from the original values by any key or algorithm, there is nothing to break cryptographically, which is why the approach is described as quantum-resistant. This helps keep the data used in test environments secure and compliant.

Even in the event of a breach, the tokens have no value to an attacker, because they are only substitutes for the real data.
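The vault idea can be sketched in a few lines, assuming an in-memory dictionary stands in for a secured vault service. The `TokenVault` class and its method names are hypothetical; the point is that tokens are drawn at random, keep the original format, and can only be reversed by looking them up.

```python
import secrets
import string


class TokenVault:
    """Toy vault: maps values to random, format-preserving tokens and back."""

    def __init__(self):
        self._token_by_value = {}
        self._value_by_token = {}

    def _random_like(self, value: str) -> str:
        """Random string with the same length, character classes, and separators."""
        out = []
        for ch in value:
            if ch.isdigit():
                out.append(secrets.choice(string.digits))
            elif ch.isalpha():
                alphabet = string.ascii_uppercase if ch.isupper() else string.ascii_lowercase
                out.append(secrets.choice(alphabet))
            else:
                out.append(ch)  # keep separators such as '-' or '@'
        return "".join(out)

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to the same token.
        if value in self._token_by_value:
            return self._token_by_value[value]
        for _ in range(100):
            token = self._random_like(value)
            if token not in self._value_by_token:
                break
        else:
            raise RuntimeError("could not find an unused token for this format")
        self._token_by_value[value] = token
        self._value_by_token[token] = value
        return token

    def detokenize(self, token: str) -> str:
        """Reversal is a vault lookup; nothing in the token itself encodes the value."""
        return self._value_by_token[token]


if __name__ == "__main__":
    vault = TokenVault()
    token = vault.tokenize("4111-1111-1111-1111")
    print(token, vault.detokenize(token) == "4111-1111-1111-1111")
```

In a test environment only the tokens would be delivered; the vault itself stays behind production-grade controls, so a leaked test database exposes substitutes only.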

Create test data that accurately reflects the actual complexity and attributes of the production environments.

This includes maintaining referential integrity across different data tables and systems to ensure that business rules and data relationships are preserved. The data should be realistic enough to enable thorough testing of real-world scenarios.
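To make the referential-integrity point concrete, the sketch below replaces a shared key in two tables with the same deterministic pseudonym, so joins still work after de-identification. It uses keyed hashing (HMAC-SHA256) as a stand-in technique; the key, table shapes, and function names are assumptions for illustration only.

```python
import hashlib
import hmac

# Demo-only key (hypothetical); a real key would come from a secrets manager.
SECRET_KEY = b"demo-only-key"


def pseudonymize(value: str, prefix: str) -> str:
    """Deterministic substitute: the same input always yields the same output."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}-{digest[:12]}"


def deidentify_customers(rows):
    return [
        {
            "customer_id": pseudonymize(r["customer_id"], "CUST"),
            "name": pseudonymize(r["name"], "NAME"),
        }
        for r in rows
    ]


def deidentify_orders(rows):
    # The foreign key goes through the same mapping as the primary key above.
    return [
        {"order_id": r["order_id"], "customer_id": pseudonymize(r["customer_id"], "CUST")}
        for r in rows
    ]


def orphaned_orders(customers, orders):
    """Orders whose customer_id no longer matches any customer row."""
    known = {c["customer_id"] for c in customers}
    return [o for o in orders if o["customer_id"] not in known]


if __name__ == "__main__":
    customers = [
        {"customer_id": "C-1001", "name": "Bob Smith"},
        {"customer_id": "C-1002", "name": "Ana Lopez"},
    ]
    orders = [
        {"order_id": 1, "customer_id": "C-1001"},
        {"order_id": 2, "customer_id": "C-1001"},
        {"order_id": 3, "customer_id": "C-1002"},
    ]
    safe_customers = deidentify_customers(customers)
    safe_orders = deidentify_orders(orders)
    print(orphaned_orders(safe_customers, safe_orders))  # empty list when joins are intact
```

The design choice that matters is a single mapping function applied to every column that carries the same identifier; a per-table random replacement would break the joins that tests depend on.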

Opt for solutions that can create de-identified test data in real-time or "on-the-fly." This involves reading production data, de-identifying it through masking or tokenization, and writing it directly to the test environment in a single, continuous process.

This method eliminates the risky intermediate step of copying raw production data into staging areas before sanitization, while reducing storage overhead by not duplicating entire production databases for de-identification purposes.
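The read, de-identify, write flow can be sketched with two SQLite connections standing in for the production and test databases. The schema, the `stream_copy` function, and the keyed-hash substitution are assumptions for illustration; the property being shown is that raw rows exist only in memory, one batch at a time, and are never written to a staging copy.

```python
import hashlib
import hmac
import sqlite3

DEMO_KEY = b"demo-only-key"  # hypothetical key for this sketch


def deidentify_row(row):
    """Replace name and email with substitutes derived from a keyed hash."""
    cust_id, _name, email = row
    tag = hmac.new(DEMO_KEY, email.lower().encode("utf-8"), hashlib.sha256).hexdigest()[:10]
    return (cust_id, f"User {tag}", f"user_{tag}@example.test")


def stream_copy(source, target, batch_size=500):
    """Read from source, de-identify in memory, write to target, batch by batch."""
    target.execute(
        "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)"
    )
    cursor = source.execute("SELECT id, name, email FROM customers ORDER BY id")
    written = 0
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        target.executemany(
            "INSERT INTO customers VALUES (?, ?, ?)",
            [deidentify_row(r) for r in batch],
        )
        written += len(batch)
    target.commit()
    return written


def build_demo_source():
    """In-memory stand-in for a production database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [
            (1, "Bob Smith", "bob.smith@example.com"),
            (2, "Ana Lopez", "ana.lopez@example.com"),
            (3, "Li Wei", "li.wei@example.com"),
        ],
    )
    conn.commit()
    return conn


if __name__ == "__main__":
    src, tgt = build_demo_source(), sqlite3.connect(":memory:")
    print(stream_copy(src, tgt, batch_size=2), "rows written")
```

Batching keeps memory use bounded regardless of table size, which is what lets this pattern avoid duplicating the full production database.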

By adopting these strategies, organizations can transform their test data provisioning work from a slow, risky, and resource-intensive chore into a secure, efficient, and value-adding component of the software development lifecycle.

DataStealth’s Test Data Management solution is specifically engineered to address the core challenges of security, efficiency, and data quality in one platform, through the capabilities described below.

DataStealth reconstructs database structures in test environments and populates them with high-fidelity, de-identified data, intelligently and securely.

This process rebuilds the database from scratch, ensuring a clean and accurate foundation for testing, with options for selective data generation based on specific criteria.

DataStealth also uses a powerful anonymization engine to replace sensitive production data with meaningful, contextually accurate substitute values.

It leverages its sophisticated de-identification engine to produce high-fidelity synthetic data which preserves the look, feel, and statistical properties of the original data without exposing any real or sensitive information.

DataStealth ensures that crucial relationships between the data elements and tables are maintained, even after de-identification.

For example, if "Bob Smith" is anonymized to "John Doe," that same substitution is applied across all interconnected records, preserving business logic for accurate testing.

A key differentiator is DataStealth’s ability to create de-identified test data "on-the-fly."

Production data is read, de-identified, and written to the target test database in a single, streamlined motion.

This eliminates the risky practice of first copying entire production databases (with live sensitive data) to a staging area before sanitization, and it significantly reduces storage costs, latency, and operational overhead associated with data duplication.

By providing real-time, production-like data, DataStealth accelerates application test cycles.

Its TDM processes can be integrated into CI/CD pipelines, enabling end-to-end automation for agile development and continuous testing.

DataStealth’s TDM solution is designed to work across diverse environments, including on-premises systems, cloud platforms (AWS, Azure, GCP), big data pipelines, and analytics platforms, offering consistent data protection and provisioning wherever your data resides.

The flexibility extends to the de-identification process itself, with granular control over matching case, size, and statistical significance, and over replacing values with like-for-like data types to meet specific testing needs.

By leveraging DataStealth, organizations can implement a Test Data Management platform that not only safeguards sensitive information and ensures compliance but also empowers testing teams with high-quality data to deliver better software, faster.

Ready to modernize your test data strategy? Schedule a demo to see how DataStealth can help.